SAP HANA Multiple Host Installation Hardware


Install and configure SAP HANA (Large Instances) on Azure

In this article, we'll walk through validating, configuring, and installing SAP HANA Large Instances (HLIs) on Azure (otherwise known as BareMetal Infrastructure).

Prerequisites

Before reading this article, become familiar with:

- HANA Large Instances common terms

- HANA Large Instances SKUs.

Also see:

- Connecting Azure VMs to HANA Large Instances

- Connect a virtual network to HANA Large Instances

Planning your installation

The installation of SAP HANA is your responsibility. You can start installing a new SAP HANA on Azure (Large Instances) server after you establish the connectivity between your Azure virtual networks and the HANA Large Instance unit(s).

Note

Per SAP policy, the installation of SAP HANA must be performed by a person who has passed the Certified SAP Technology Associate exam or the SAP HANA Installation certification exam, or by an SAP-certified system integrator (SI).

When you're planning to install HANA 2.0, see SAP support note #2235581 - SAP HANA: Supported operating systems. Make sure the operating system (OS) is supported with the SAP HANA release you're installing. The list of supported OS releases for HANA 2.0 is more restrictive than the one for HANA 1.0. Confirm that the OS release you're interested in is supported for the particular HANA Large Instance. Use this list; select the HLI to see the details of the supported OS list for that unit.

Validate the following before you begin the HANA installation:

- HLI unit(s)

- Operating system configuration

- Network configuration

- Storage configuration

Validate the HANA Large Instance unit(s)

After you receive the HANA Large Instances from Microsoft, establish access and connectivity to them. Then validate the following settings and adjust them as necessary.

- Check in the Azure portal whether the instance(s) show up with the correct SKUs and OS. For more information, see Azure HANA Large Instances control through Azure portal.

- Register the OS of the instance with your OS provider. For SUSE, this step includes registering your SUSE Linux OS with an instance of the SUSE Subscription Management Tool (SMT) that's deployed in a VM in Azure.

The HANA Large Instance can connect to this SMT instance. (For more information, see How to set up SMT server for SUSE Linux.) If you're using a Red Hat OS, it needs to be registered with the Red Hat Subscription Manager that you'll connect to. For more information, see the remarks in What is SAP HANA on Azure (Large Instances)?.

This step is necessary for patching the OS, which is your responsibility. For SUSE, see the documentation on installing and configuring SMT.

- Check for new patches and fixes for the specific OS release/version. Verify that the HANA Large Instance has the latest patches. Sometimes the latest patches aren't included, so be sure to check.

- Check the relevant SAP notes for installing and configuring SAP HANA on the specific OS release/version. Microsoft can't always configure an HLI completely, because recommendations change, SAP notes are updated, and some configurations depend on individual scenarios.

So be sure to read the SAP notes related to SAP HANA for your exact Linux release. Also check the configuration of the OS release/version, and apply the recommended configuration settings if you haven't already.

Specifically, check the following parameters and adjust them if necessary:

- net.core.rmem_max = 16777216

- net.core.wmem_max = 16777216

- net.core.rmem_default = 16777216

- net.core.wmem_default = 16777216

- net.core.optmem_max = 16777216

- net.ipv4.tcp_rmem = 65536 16777216 16777216

- net.ipv4.tcp_wmem = 65536 16777216 16777216

Starting with SLES 12 SP1 and Red Hat Enterprise Linux (RHEL) 7.2, these parameters must be set in a configuration file in the /etc/sysctl.d directory. For example, create a configuration file named 91-NetApp-HANA.conf. For older SLES and RHEL releases, these parameters must be set in /etc/sysctl.conf.
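To apply and verify these values, a small script can render the sysctl.d file and compare it with the live kernel settings. The following is a minimal sketch. It assumes Python 3.9 or later on the HLI unit and reuses the example file name 91-NetApp-HANA.conf from the text. The helper names (`render_conf`, `read_current`, `mismatches`) are illustrative and aren't part of any SAP or Microsoft tooling.

```python
"""Sketch: render and check the HANA network sysctl settings listed above.

Assumptions: Python 3.9+, read access to /proc/sys on the HLI unit, and the
example file name 91-NetApp-HANA.conf. Helper names are hypothetical.
"""
from pathlib import Path

# Target values from the parameter list above.
HANA_NET_SYSCTLS = {
    "net.core.rmem_max": "16777216",
    "net.core.wmem_max": "16777216",
    "net.core.rmem_default": "16777216",
    "net.core.wmem_default": "16777216",
    "net.core.optmem_max": "16777216",
    "net.ipv4.tcp_rmem": "65536 16777216 16777216",
    "net.ipv4.tcp_wmem": "65536 16777216 16777216",
}


def render_conf(settings: dict[str, str]) -> str:
    """Return the text for a file such as /etc/sysctl.d/91-NetApp-HANA.conf."""
    return "".join(f"{key} = {value}\n" for key, value in settings.items())


def read_current(key: str, proc_root: Path = Path("/proc/sys")) -> str:
    """Read a live kernel value; net.core.rmem_max maps to net/core/rmem_max."""
    raw = (proc_root / key.replace(".", "/")).read_text()
    # /proc separates multi-value entries with tabs; normalize to single spaces.
    return " ".join(raw.split())


def mismatches(settings: dict[str, str], proc_root: Path = Path("/proc/sys")) -> dict[str, str]:
    """Map each key whose live value differs from its target to the live value."""
    result = {}
    for key, wanted in settings.items():
        current = read_current(key, proc_root)
        if current != wanted:
            result[key] = current
    return result
```

You could write the output of `render_conf(HANA_NET_SYSCTLS)` to /etc/sysctl.d/91-NetApp-HANA.conf and load it with `sysctl --system`. Afterward, `mismatches(HANA_NET_SYSCTLS)` should return an empty dictionary.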

For all RHEL releases starting with RHEL 6.3, keep in mind:

- The sunrpc.tcp_slot_table_entries = 128 parameter must be set in /etc/modprobe.d/sunrpc-local.conf. If the file doesn't exist, create it first, and then add the entry:

- options sunrpc tcp_max_slot_table_entries=128
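The step above can be scripted so that it's safe to run more than once. This is a minimal sketch. It assumes it runs as root on a RHEL-based HLI unit. The path and option line are the ones given in the text, and `ensure_line` is a hypothetical helper name.

```python
"""Sketch: make sure the sunrpc slot-table option is present (RHEL 6.3+).

Assumptions: run as root; path and option line are taken from the text.
`ensure_line` is a hypothetical helper, not part of any vendor tooling.
"""
from pathlib import Path

SUNRPC_CONF = Path("/etc/modprobe.d/sunrpc-local.conf")
SUNRPC_OPTION = "options sunrpc tcp_max_slot_table_entries=128"


def ensure_line(path: Path, line: str) -> bool:
    """Create the file if needed and append `line` once.

    Returns True if the file was changed, False if the line was already there.
    """
    text = path.read_text() if path.exists() else ""
    if line in (entry.strip() for entry in text.splitlines()):
        return False
    # Start on a fresh line if the existing file doesn't end with a newline.
    prefix = "" if text == "" or text.endswith("\n") else "\n"
    with path.open("a") as handle:
        handle.write(prefix + line + "\n")
    return True
```

For example, `ensure_line(SUNRPC_CONF, SUNRPC_OPTION)`. Options in /etc/modprobe.d are read when the module is loaded, so the new value typically takes effect after the sunrpc module is reloaded or the unit is rebooted.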

- Check the system time of your HANA Large Instance. The instances are deployed with a system time zone that represents the location of the Azure region in which the HANA Large Instance stamp is located. You can change the system time or time zone of the instances you own.

If you order more instances into your tenant, you need to adapt the time zone of the newly delivered instances. Microsoft has no insight into the system time zone you set up on the instances after the handover, so newly deployed instances might not be set to the same time zone as the one you changed to. It's up to you to adapt the time zone of the instance(s) that were handed over, as needed.
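Once you've collected each unit's time zone, spotting the newly delivered instances that don't match is a simple comparison. The following is a minimal sketch. It assumes you've already gathered the zones yourself, for example from the "Time zone" line printed by `timedatectl` on each host. The host names and the helper name `odd_time_zones` are hypothetical.

```python
"""Sketch: flag HANA Large Instances whose time zone differs from the others.

Assumptions: zones were collected per host beforehand (for example from
`timedatectl` output); host names and the helper name are hypothetical.
"""
from collections import Counter
from typing import Optional


def odd_time_zones(zones: dict[str, str], expected: Optional[str] = None) -> dict[str, str]:
    """Return hosts whose zone differs from `expected`.

    If `expected` isn't given, the most common zone in the tenant is used.
    """
    if not zones:
        return {}
    reference = expected or Counter(zones.values()).most_common(1)[0][0]
    return {host: zone for host, zone in zones.items() if zone != reference}
```

For example, `odd_time_zones({"hli1": "Europe/Amsterdam", "hli2": "Europe/Amsterdam", "hli3": "UTC"})` flags only `hli3`. Passing `expected` explicitly is safer when the tenant has only a few units.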

- Check /etc/hosts. As the blades are handed over, they have different IP addresses assigned for different purposes. It's important to check the /etc/hosts file when units are added to an existing tenant, because the /etc/hosts file of newly deployed systems may not contain the correct IP addresses of systems delivered earlier. Ensure that a newly deployed instance can resolve the names of the units you deployed earlier in your tenant.

Operating system

The swap space of the delivered OS image is set to 2 GB according to SAP support note #1999997 - FAQ: SAP HANA memory. If you want a different setting, you must set it yourself.

SUSE Linux Enterprise Server 12 SP1 for SAP applications is the Linux distribution that's installed for SAP HANA on Azure (Large Instances). This distribution provides SAP-specific capabilities, including preset parameters for running SAP on SLES effectively.

For several useful resources related to deploying SAP HANA on SLES, see:

- Resource library/white papers on the SUSE website.

- SAP on SUSE on the SAP Community Network (SCN).

These resources include information on setting up high availability, security hardening specific to SAP operations, and more.

Here are more resources for SAP on SUSE:

- SAP HANA on SUSE Linux site

- Best Practice for SAP: Enqueue replication – SAP NetWeaver on SUSE Linux Enterprise 12

- ClamSAP – SLES virus protection for SAP (including SLES 12 for SAP applications)

The following documents are SAP support notes applicable to implementing SAP HANA on SLES 12:

- SAP support note #1944799 – SAP HANA guidelines for SLES operating system installation

- SAP support note #2205917 – SAP HANA DB recommended OS settings for SLES 12 for SAP applications

- SAP support note #1984787 – SUSE Linux Enterprise Server 12: installation notes

- SAP support note #171356 – SAP software on Linux: General information

- SAP support note #1391070 – Linux UUID solutions

Red Hat Enterprise Linux for SAP HANA is another offering for running SAP HANA on HANA Large Instances. Releases of RHEL 7.2 and 7.3 are available and supported. For more information on SAP on Red Hat, see the SAP HANA on Red Hat Linux site.

The following documents are SAP support notes applicable to implementing SAP HANA on Red Hat:

- SAP support note #2009879 - SAP HANA guidelines for Red Hat Enterprise Linux (RHEL) operating system

- SAP support note #2292690 - SAP HANA DB: Recommended OS settings for RHEL 7

- SAP support note #1391070 – Linux UUID solutions

- SAP support note #2228351 - Linux: SAP HANA Database SPS 11 revision 110 (or higher) on RHEL 6 or SLES 11

- SAP support note #2397039 - FAQ: SAP on RHEL

- SAP support note #2002167 - Red Hat Enterprise Linux 7.x: Installation and upgrade

Time synchronization

SAP applications built on the SAP NetWeaver architecture are sensitive to time differences between the components of the SAP system. You're probably familiar with SAP ABAP short dumps with the error title ZDATE_LARGE_TIME_DIFF. These short dumps appear when the system times of different servers or virtual machines (VMs) drift too far apart.

For SAP HANA on Azure (Large Instances), the time synchronization done in Azure doesn't apply to the compute units in the Large Instance stamps. For SAP applications running in native Azure VMs, Azure ensures that the system time is properly synchronized.

As a result, you need to set up a separate time server. This server will be used by the SAP application servers running on Azure VMs and by the SAP HANA database instances running on HANA Large Instances. The storage infrastructure in Large Instance stamps is time-synchronized with Network Time Protocol (NTP) servers.
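To spot-check how far a Large Instance unit or Azure VM has drifted from your time server, you can send a single SNTP request and apply the standard NTP offset formula from RFC 5905. This is a minimal sketch for diagnostics only; it doesn't replace a time daemon such as chronyd or ntpd. It assumes UDP port 123 to your time server is reachable. The server name `ntp.example.internal` and the helper names are hypothetical.

```python
"""Sketch: measure this host's clock offset against an NTP time server.

Assumptions: UDP port 123 is reachable; "ntp.example.internal" is a
hypothetical server name. Minimal SNTP spot check, not a time daemon.
"""
import socket
import struct
import time

NTP_EPOCH_DELTA = 2208988800  # seconds from 1900-01-01 (NTP) to 1970-01-01 (Unix)


def ntp_to_unix(seconds: int, fraction: int) -> float:
    """Convert a 64-bit NTP timestamp (seconds, 32-bit fraction) to Unix time."""
    return seconds - NTP_EPOCH_DELTA + fraction / 2**32


def clock_offset(t1: float, t2: float, t3: float, t4: float) -> float:
    """RFC 5905 offset (server minus client).

    t1 = client send, t2 = server receive, t3 = server send, t4 = client receive.
    """
    return ((t2 - t1) + (t3 - t4)) / 2


def query_offset(server: str, timeout: float = 2.0) -> float:
    """Send one SNTP client request and return the clock offset in seconds."""
    request = b"\x1b" + 47 * b"\0"  # LI=0, version 3, mode 3 (client)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        t1 = time.time()
        sock.sendto(request, (server, 123))
        data, _ = sock.recvfrom(48)
        t4 = time.time()
    # Receive timestamp occupies bytes 32-39, transmit timestamp bytes 40-47.
    rx_s, rx_f, tx_s, tx_f = struct.unpack("!4I", data[32:48])
    return clock_offset(t1, ntp_to_unix(rx_s, rx_f), ntp_to_unix(tx_s, tx_f), t4)
```

For example, `query_offset("ntp.example.internal")` returns the offset in seconds. A positive value means the local clock is behind the server. Running it on both the HLI units and the application server VMs shows whether they agree with the same time source.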

Networking

In designing your Azure virtual networks and connecting those virtual networks to the HANA Large Instances, be sure to follow the recommendations described in:

- SAP HANA (Large Instance) overview and architecture on Azure

- SAP HANA (Large Instances) infrastructure and connectivity on Azure

Here are some details worth mentioning about the networking of the individual units. Every HANA Large Instance unit comes with two or three IP addresses assigned to two or three network interface controller (NIC) ports. Three IP addresses are used in HANA scale-out configurations and in the HANA system replication scenario. One of the IP addresses assigned to the NIC of the unit comes from the server IP pool that's described in SAP HANA (Large Instances) overview and architecture on Azure.

For more information about the Ethernet details for your architecture, see HLI supported scenarios.

Storage

The storage layout for SAP HANA (Large Instances) is configured by SAP HANA on Azure Service Management according to SAP's recommended guidelines. These guidelines are documented in SAP HANA storage requirements.

The approximate sizes of the volumes for the different HANA Large Instances SKUs are documented in SAP HANA (Large Instances) overview and architecture on Azure.

The naming conventions of the storage volumes are listed in the following table:

| Storage usage | Mount name | Volume name |
|---|---|---|
| HANA data | /hana/data/SID/mnt0000<m> | Storage IP:/hana_data_SID_mnt00001_tenant_vol |
| HANA log | /hana/log/SID/mnt0000<m> | Storage IP:/hana_log_SID_mnt00001_tenant_vol |
| HANA log backup | /hana/log/backups | Storage IP:/hana_log_backups_SID_mnt00001_tenant_vol |
| HANA shared | /hana/shared/SID | Storage IP:/hana_shared_SID_mnt00001_tenant_vol/shared |
| usr/sap | /usr/sap/SID | Storage IP:/hana_shared_SID_mnt00001_tenant_vol/usr_sap |

SID is the HANA instance System ID.

Tenant is an internal enumeration of operations when deploying a tenant.

HANA shared and usr/sap share the same volume. The mount point names include the system ID of the HANA instance and the mount number. In scale-up deployments, there's only one mount, such as mnt00001. In scale-out deployments, you'll see as many mounts as you have worker and primary nodes.
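The naming rules above are mechanical enough to generate, which helps when you compare the delivered layout with what you expect. This is a minimal sketch that follows the table. It uses one mntNNNNN mount per node, starting at mnt00001, and the volume names exactly as listed with mnt00001. The storage IP, tenant value, SID, and helper names in the examples are hypothetical placeholders for the values from your onboarding information.

```python
"""Sketch: derive mount points and NFS volume paths from the naming table.

Assumptions: storage_ip, tenant, and sid are placeholders from onboarding
information; helper names are hypothetical. Volume names follow the table
literally (mount number mnt00001).
"""


def mount_points(sid: str, nodes: int = 1) -> dict[str, list[str]]:
    """Mount points per storage usage; data and log get one mntNNNNN per node."""
    mounts = [f"mnt{n:05d}" for n in range(1, nodes + 1)]
    return {
        "data": [f"/hana/data/{sid}/{m}" for m in mounts],
        "log": [f"/hana/log/{sid}/{m}" for m in mounts],
        "log_backup": ["/hana/log/backups"],
        "shared": [f"/hana/shared/{sid}"],
        "usr_sap": [f"/usr/sap/{sid}"],
    }


def volume_paths(sid: str, storage_ip: str, tenant: str) -> dict[str, str]:
    """NFS volume paths as listed in the table."""
    shared = f"{storage_ip}:/hana_shared_{sid}_mnt00001_{tenant}_vol"
    return {
        "data": f"{storage_ip}:/hana_data_{sid}_mnt00001_{tenant}_vol",
        "log": f"{storage_ip}:/hana_log_{sid}_mnt00001_{tenant}_vol",
        "log_backup": f"{storage_ip}:/hana_log_backups_{sid}_mnt00001_{tenant}_vol",
        "shared": f"{shared}/shared",  # HANA shared and usr/sap use the same volume
        "usr_sap": f"{shared}/usr_sap",
    }
```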

For scale-out environments, the data, log, and log backup volumes are shared and attached to each node in the scale-out configuration. For configurations with multiple SAP instances, a different set of volumes is created and attached to the HANA Large Instance for each instance. For storage layout details for your scenario, see HLI supported scenarios.

HANA Large Instances come with a generous disk volume for HANA/data and a volume for HANA/log/backup. We made HANA/data so large because the storage snapshots use the same disk volume. The more storage snapshots you take, the more space the snapshots consume in your assigned storage volumes.

The HANA/log/backup volume isn't meant to be the volume for database backups. It's sized to be used as the backup volume for the HANA transaction log backups. For more information, see SAP HANA (Large Instances) high availability and disaster recovery on Azure.

You can increase your storage by purchasing extra capacity in 1-TB increments. This extra storage can be added as new volumes to a HANA Large Instance.

During onboarding with SAP HANA on Azure Service Management, you'll specify a user ID (UID) and group ID (GID) for the sidadm user and the sapsys group (for example: 1000,500). During installation of the SAP HANA system, you must use these same values. If you want to deploy multiple HANA instances on a unit, you get multiple sets of volumes (one set for each instance). So at deployment time, you need to define:

- The SID of the different HANA instances (sidadm is derived from it).

- The memory sizes of the different HANA instances. The memory size per instance defines the size of the volumes in each individual volume set.
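Because the installation must use the UID and GID agreed at onboarding, it's worth confirming them on the unit, for example after installation or after pre-creating the user and group. This is a minimal sketch that reads only the local passwd and group databases. It assumes the SAP convention that the admin user is the lowercase SID followed by "adm", and that sapsys is that user's primary group. The SID `H01` and the values 1000/500 are only examples, and the helper names are hypothetical.

```python
"""Sketch: confirm that <sid>adm and sapsys use the UID/GID agreed at onboarding.

Assumptions: Unix host; SID and ID values are examples; sapsys is expected to
be the primary group of <sid>adm. Helper names are hypothetical.
"""
import grp
import pwd


def sidadm_name(sid: str) -> str:
    """The admin user name: lowercase SID followed by 'adm'."""
    return f"{sid.lower()}adm"


def check_ids(sid: str, uid: int, gid: int) -> list[str]:
    """Return a list of problems; an empty list means the IDs match."""
    problems = []
    user = sidadm_name(sid)
    try:
        entry = pwd.getpwnam(user)
    except KeyError:
        problems.append(f"user {user} does not exist yet")
    else:
        if entry.pw_uid != uid:
            problems.append(f"{user} has UID {entry.pw_uid}, expected {uid}")
        if entry.pw_gid != gid:
            problems.append(f"{user} has primary GID {entry.pw_gid}, expected {gid}")
    try:
        group = grp.getgrnam("sapsys")
    except KeyError:
        problems.append("group sapsys does not exist yet")
    else:
        if group.gr_gid != gid:
            problems.append(f"sapsys has GID {group.gr_gid}, expected {gid}")
    return problems
```

For example, `check_ids("H01", 1000, 500)` should return an empty list on a correctly prepared unit.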

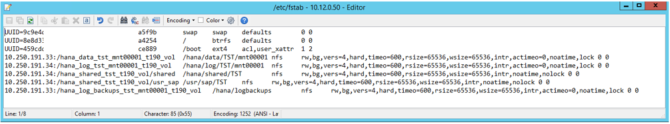

Based on storage provider recommendations, the following mount options are configured for all mounted volumes (excluding the boot LUN):

- nfs rw, vers=four, hard, timeo=600, rsize=1048576, wsize=1048576, intr, noatime, lock 0 0

These mount points are configured in /etc/fstab as shown in the following screenshots:

The output of the command df -h on an S72m HANA Large Instance looks like:

The storage controller and nodes in the Large Instance stamps are synchronized to NTP servers. It's important to synchronize SAP HANA on Azure (Large Instances) and the Azure VMs against an NTP server. Doing so eliminates significant time drift between the infrastructure and the compute units in Azure or in the Large Instance stamps.

To optimize SAP HANA for the underlying storage, set the following SAP HANA configuration parameters:

- max_parallel_io_requests 128

- async_read_submit on

- async_write_submit_active on

- async_write_submit_blocks all

For SAP HANA 1.0 versions up to SPS12, these parameters can be set during the installation of the SAP HANA database, as described in SAP note #2267798 - Configuration of the SAP HANA database.

You can also configure the parameters after the SAP HANA database installation by using the hdbparam framework.

The storage used in HANA Large Instances limits files to 16 TB each. Unlike file size limits in EXT3 file systems, HANA isn't implicitly aware of the limit that HANA Large Instances storage enforces. As a result, HANA won't automatically create a new data file when the 16 TB limit is reached. When HANA attempts to grow a file beyond 16 TB, it reports errors, and eventually the index server crashes.

Important

To prevent HANA from trying to grow data files beyond the 16 TB file size limit of HANA Large Instance storage, set the following parameters in the SAP HANA global.ini configuration file:

- datavolume_striping=true

- datavolume_striping_size_gb = 15000

- See also SAP note #2400005

- Be aware of SAP note #2631285
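The striping size only protects the data files if it stays below the per-file limit. The following minimal sketch makes that arithmetic explicit and renders the two settings as a global.ini fragment. It treats 16 TB conservatively as 16,000 GB, so 15,000 GB leaves about 1,000 GB of headroom; if 16 TB is read as 16,384 GB, the headroom is larger. It also assumes the settings belong in the [persistence] section. Verify the section against SAP note #2400005 for your release. `striping_fragment` is a hypothetical helper.

```python
"""Sketch: check the striping size against the 16 TB file limit and render
the settings as a global.ini fragment.

Assumptions: [persistence] section (verify in SAP note #2400005); 16 TB is
taken conservatively as 16,000 GB. Helper name is hypothetical.
"""
import configparser
import io

FILE_LIMIT_GB = 16_000  # HLI per-file limit, read conservatively as decimal TB
STRIPING_SIZE_GB = 15_000


def striping_fragment(size_gb: int = STRIPING_SIZE_GB) -> str:
    """Return global.ini text for data volume striping, refusing unsafe sizes."""
    if size_gb >= FILE_LIMIT_GB:
        raise ValueError(f"{size_gb} GB does not stay below the {FILE_LIMIT_GB} GB file limit")
    config = configparser.ConfigParser()
    config["persistence"] = {
        "datavolume_striping": "true",
        "datavolume_striping_size_gb": str(size_gb),
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```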

With SAP HANA 2.0, the hdbparam framework has been deprecated, so the parameters must be set by using SQL commands. For more information, see SAP note #2399079: Elimination of hdbparam in HANA 2.
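For HANA 2.0, the storage I/O parameters listed earlier can be expressed as `ALTER SYSTEM ALTER CONFIGURATION` statements. This is a minimal sketch that only generates the SQL text; it doesn't connect to the database. It assumes the parameters live in the `fileio` section of global.ini and are set at the `SYSTEM` layer. Confirm both against SAP note #2399079 and your release before running the output with hdbsql or another SQL client. `alter_configuration` is a hypothetical helper.

```python
"""Sketch: generate HANA 2.0 SQL for the storage I/O parameters above.

Assumptions: section 'fileio' and layer 'SYSTEM' are to be verified against
SAP note #2399079; this only builds SQL text. Helper name is hypothetical.
"""

FILEIO_PARAMETERS = [
    ("max_parallel_io_requests", "128"),
    ("async_read_submit", "on"),
    ("async_write_submit_active", "on"),
    ("async_write_submit_blocks", "all"),
]


def alter_configuration(section: str, key: str, value: str, layer: str = "SYSTEM") -> str:
    """Build one ALTER SYSTEM ALTER CONFIGURATION statement for global.ini."""
    return (
        f"ALTER SYSTEM ALTER CONFIGURATION ('global.ini', '{layer}') "
        f"SET ('{section}', '{key}') = '{value}' WITH RECONFIGURE;"
    )
```

For example, `"\n".join(alter_configuration("fileio", k, v) for k, v in FILEIO_PARAMETERS)` produces one statement per parameter, ready to review before execution.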

Refer to HLI supported scenarios to learn more about the storage layout for your architecture.

Next steps

Go through the steps of installing SAP HANA on Azure (Large Instances).
